Hardware

Firmware: v1.8

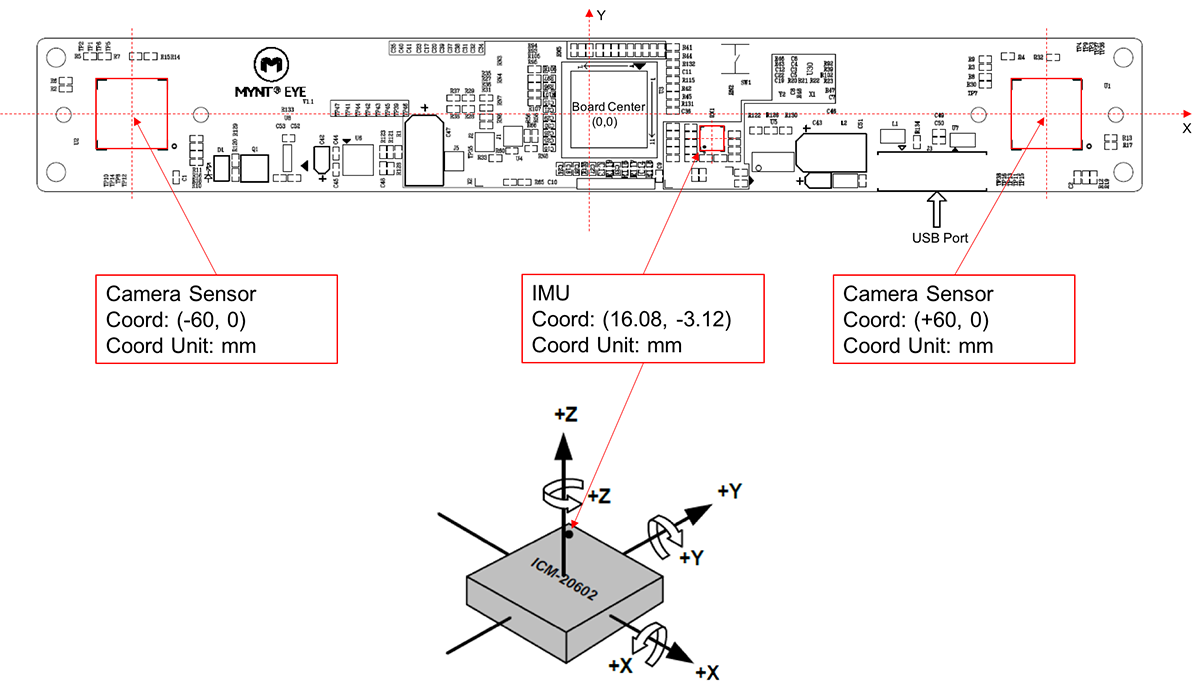

The IMU has a 3-axis accelerometer and a 3-axis gyroscope to measure linear acceleration and angular rate. The IMU sampling rate is 250 Hz.

IMU Rotation and Translation

IMU rotation matrix (right-handed coordinate system, RUB (right-up-back), rotated 90° around the z-axis):

\[
\begin{bmatrix}
0 & -1 & 0 \\
1 & 0 & 0 \\
0 & 0 & 1
\end{bmatrix}
\]
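The matrix is the standard right-handed rotation of 90° about the z-axis. The minimal sketch below (standard library only) builds that rotation from its angle and checks that it sends the x-axis onto the y-axis. The text does not state whether the matrix maps camera coordinates to IMU coordinates or the reverse, so the sketch only checks the matrix itself; the helper names are illustrative.

```python
import math

def rot_z(theta_deg):
    """Right-handed rotation matrix for theta_deg degrees about the z-axis."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]

def matvec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

# Round away floating-point noise such as cos(90°) ≈ 6e-17.
R = [[round(x, 12) for x in row] for row in rot_z(90)]
print(R)                           # should match the matrix above
print(matvec(R, [1.0, 0.0, 0.0]))  # the x-axis is mapped onto the y-axis
```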

Translation vectors from camera to IMU (in meters):

Camera Left

[ 0.07608, -0.00312, -0.01464]

Camera Right

[-0.04392, -0.00312, -0.01464]

The z value is measured from the center of the lens to the board. It may deviate by ±0.25 mm because of differences in focal length.
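Because the two translation vectors differ only in x, their difference gives the nominal stereo baseline, which can be compared with the "baseline norm" that Kalibr reports under Results (about 0.1216 m). A minimal sketch, assuming both vectors are expressed in the same (IMU) frame:

```python
import math

# Camera-to-IMU translation vectors in meters, copied from the values above.
t_left = [0.07608, -0.00312, -0.01464]
t_right = [-0.04392, -0.00312, -0.01464]

def baseline(a, b):
    """Euclidean distance between two translation vectors, in meters."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

b = baseline(t_left, t_right)
print(f"nominal stereo baseline: {b:.5f} m")
```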

IMU Noise Density and Random Walk

| Sensor | Noise density | Random walk |
|---|---|---|
| Accelerometer | 7.6509e-02 | 5.3271e-02 |
| Gyroscope | 9.0086e-03 | 5.5379e-05 |
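Filters and calibration tools usually need per-sample standard deviations rather than densities. Under Kalibr's IMU noise model, the discrete white-noise std is σ_d = σ / √Δt and the discrete bias random-walk std is σ_bd = σ_b · √Δt. The same relation reproduces the "Noise density (discrete)" lines in the Kalibr output under Results (0.0011809 × √500 ≈ 0.0264). The sketch below assumes the table values are continuous-time densities in Kalibr's units and uses the 250 Hz rate stated above.

```python
import math

RATE_HZ = 250.0  # IMU sampling rate stated in the Hardware section

# Assumption: continuous-time densities in Kalibr-style units
# (e.g. m/s^2/sqrt(Hz) for the accelerometer noise density).
table = {
    "accelerometer": {"noise_density": 7.6509e-02, "random_walk": 5.3271e-02},
    "gyroscope": {"noise_density": 9.0086e-03, "random_walk": 5.5379e-05},
}

def discretize(noise_density, random_walk, rate_hz):
    """Return (per-sample white-noise std, per-sample bias-increment std)."""
    dt = 1.0 / rate_hz
    sigma_d = noise_density / math.sqrt(dt)  # white noise grows with the rate
    sigma_bd = random_walk * math.sqrt(dt)   # bias increments shrink with the rate
    return sigma_d, sigma_bd

for sensor, p in table.items():
    sd, sbd = discretize(p["noise_density"], p["random_walk"], RATE_HZ)
    print(f"{sensor}: sigma_d={sd:.6g}, sigma_bd={sbd:.6g}")
```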

Install OpenCV 3.4

```bash
# Run from the OpenCV 3.4 source directory
mkdir build
cd build
cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local \
      -D WITH_TBB=ON -D BUILD_EXAMPLES=OFF -D BUILD_DOCS=OFF \
      -D BUILD_PERF_TESTS=OFF -D BUILD_TESTS=OFF -D WITH_GTK_2_X=ON ..
make -j8
sudo make install
# Register /usr/local/lib with the dynamic linker
sudo /bin/bash -c 'echo "/usr/local/lib" > /etc/ld.so.conf.d/opencv.conf'
sudo ldconfig
```

Install MYNT-EYE-OKVIS-Sample

```bash
sudo apt-get install cmake libgoogle-glog-dev libatlas-base-dev libeigen3-dev \
    libsuitesparse-dev libboost-dev libboost-filesystem-dev
git clone https://github.com/ethz-asl/okvis.git
# Enter the cloned source directory before creating build/
mkdir build && cd build
cmake -DCMAKE_BUILD_TYPE=Release ..
make -j8
make install
```

Build Kalibr with catkin

```bash
cd ~/catkin_ws/src
git clone https://github.com/ethz-asl/Kalibr.git
cd ..
catkin_make -DCMAKE_BUILD_TYPE="Release" -j4
source ./devel/setup.sh
```

Calibrate Camera and IMU

Steps

Calibration targets

You can download a calibration target from the website or create one yourself by following the instructions.

Record a dataset from the stereo camera and IMU.

```bash
cd mynt-eye-okvis-sample/build
./okvis_app_getcameraimucalibdataset 0 ./cameraimu/cam0/ ./cameraimu/cam1/ ./cameraimu/imu0.csv
```

./cameraimu/cam0 is the folder that stores the left images, ./cameraimu/cam1 is the folder that stores the right images, and ./cameraimu/imu0.csv is the file that stores the IMU data.
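Before building the bag, it can help to sanity-check the recorded folder. The sketch below assumes the layout that kalibr_bagcreater appears to expect: image files named by integer nanosecond timestamps in cam0/ and cam1/, and an imu0.csv with a header row followed by seven columns (timestamp, gyroscope x/y/z, accelerometer x/y/z). These details and the check_dataset helper are assumptions, not part of the MYNT EYE tools.

```python
import csv
import os

IMAGE_EXTS = (".png", ".jpg", ".jpeg", ".bmp", ".pgm")  # assumed accepted formats

def check_dataset(root):
    """Return a list of problems found in an assumed Kalibr-style dataset folder."""
    problems = []
    for cam in ("cam0", "cam1"):
        cam_dir = os.path.join(root, cam)
        if not os.path.isdir(cam_dir):
            problems.append(f"missing folder {cam_dir}")
            continue
        images = [f for f in os.listdir(cam_dir) if f.lower().endswith(IMAGE_EXTS)]
        if not images:
            problems.append(f"no images in {cam_dir}")
        # File names are assumed to be integer timestamps in nanoseconds.
        bad = [f for f in images if not os.path.splitext(f)[0].isdigit()]
        if bad:
            problems.append(f"{len(bad)} image(s) in {cam_dir} not named by timestamp")
    imu_path = os.path.join(root, "imu0.csv")
    if not os.path.isfile(imu_path):
        problems.append(f"missing file {imu_path}")
    else:
        with open(imu_path, newline="") as fh:
            rows = [r for r in csv.reader(fh) if r]  # skip blank lines
        # First row assumed to be a header; data rows need 7 columns.
        if any(len(r) != 7 for r in rows[1:]):
            problems.append("imu0.csv data rows do not all have 7 columns")
    return problems

if __name__ == "__main__":
    print(check_dataset("./cameraimu") or "dataset layout looks OK")
```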

Use kalibr_bagcreater to create a calibration bag from the stereo camera and IMU data. First move the cameraimu folder to Kalibr's workspace, then create the calibration bag.

```bash
cd ~/catkin_ws/
source ./devel/setup.sh
kalibr_bagcreater --folder cameraimu/. --output-bag cameraimucalib.bag
```
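The topic names passed to --topics in the next step have to match what ends up in the bag. As far as we can tell, kalibr_bagcreater derives them from the folder contents: each camN/ subfolder becomes /camN/image_raw and each imuN.csv becomes /imuN. The hypothetical helper below encodes that assumption; running `rosbag info cameraimucalib.bag` is the authoritative check.

```python
import os

def expected_topics(root):
    """Topic names a kalibr_bagcreater-style folder is assumed to produce."""
    topics = []
    for name in sorted(os.listdir(root)):
        path = os.path.join(root, name)
        if os.path.isdir(path) and name.startswith("cam"):
            topics.append(f"/{name}/image_raw")  # camera folder -> image topic
        elif name.startswith("imu") and name.endswith(".csv"):
            topics.append("/" + os.path.splitext(name)[0])  # imu0.csv -> /imu0
    return topics
```

For the cameraimu folder recorded above, this would list /cam0/image_raw, /cam1/image_raw and /imu0.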

Calibrate stereo camera

```bash
kalibr_calibrate_cameras --target april_5x8.yaml --bag cameraimucalib.bag \
    --models pinhole-radtan pinhole-radtan \
    --topics /cam0/image_raw /cam1/image_raw
```
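The pinhole-radtan model is a pinhole projection combined with radial-tangential (Brown-Conrady) distortion, the same k1, k2, p1, p2 form used by OpenCV. Kalibr lists the distortion coefficients in that order. The sketch below projects a 3D point in the camera frame to pixels using the cam0 values from the Results section. It is an illustration of the model, not Kalibr's own code, and the function name is hypothetical.

```python
def project_pinhole_radtan(p_cam, fx, fy, cx, cy, k1, k2, p1, p2):
    """Project a camera-frame 3D point to pixel coordinates (u, v)."""
    X, Y, Z = p_cam
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    x, y = X / Z, Y / Z                      # normalized image coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2    # radial distortion factor
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)  # + tangential
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * xd + cx, fy * yd + cy

# cam0 intrinsics and distortion copied from the Kalibr output under Results.
CAM0 = dict(fx=441.99817968009677, fy=441.66869887034943,
            cx=369.9738561699648, cy=234.13190157824815,
            k1=-0.2974398881998292, k2=0.08194961900031578,
            p1=0.00022897719568841816, p2=-0.00012227166858536044)

print(project_pinhole_radtan((0.1, -0.05, 1.0), **CAM0))
```

A point on the optical axis lands exactly on the principal point, which is a quick way to check the parameter order.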

april_5x8.yaml is the calibration target configuration file, which looks like this:

```yaml
target_type: 'aprilgrid' # grid type
tagCols: 5               # number of apriltags
tagRows: 8               # number of apriltags
tagSize: 0.025           # size of apriltag, edge to edge [m]
tagSpacing: 0.3          # ratio of space between tags to tagSize
```
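Because tagSpacing is a ratio, the physical gap between tags is tagSpacing × tagSize = 0.3 × 0.025 m = 0.0075 m, which is the "Spacing 0.0075 [m]" that Kalibr echoes under Results. The sketch below also estimates the extent of the tag area (tags plus the gaps between them, excluding any outer margin), which is handy when printing the target to check its scale. The helper name is illustrative.

```python
def aprilgrid_geometry(tag_cols, tag_rows, tag_size, tag_spacing_ratio):
    """Return (gap between tags, tag-area width, tag-area height) in meters."""
    gap = tag_spacing_ratio * tag_size
    width = tag_cols * tag_size + (tag_cols - 1) * gap
    height = tag_rows * tag_size + (tag_rows - 1) * gap
    return gap, width, height

gap, w, h = aprilgrid_geometry(5, 8, 0.025, 0.3)
print(f"gap {gap:.4f} m, tag area {w:.4f} m x {h:.4f} m")
```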

If you use a checkerboard instead, the file looks like this:

```yaml
target_type: 'checkerboard' # grid type
targetCols: 6               # number of internal chessboard corners
targetRows: 7               # number of internal chessboard corners
rowSpacingMeters: 0.06      # size of one chessboard square [m]
colSpacingMeters: 0.06      # size of one chessboard square [m]
```
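A checkerboard target is described by its internal corners, so a 6 × 7 board with 0.06 m squares has 42 corners lying on a plane. The hypothetical helper below generates their 3D coordinates (z = 0) row by row. This is the usual way to build object points for calibration, but Kalibr's internal corner ordering may differ.

```python
def checkerboard_object_points(cols, rows, col_spacing, row_spacing):
    """3D coordinates (meters, z = 0) of internal corners in row-major order."""
    return [(c * col_spacing, r * row_spacing, 0.0)
            for r in range(rows) for c in range(cols)]

pts = checkerboard_object_points(6, 7, 0.06, 0.06)
print(len(pts), pts[0], pts[-1])
```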

Calibrate stereo camera and IMU

```bash
kalibr_calibrate_imu_camera --target april_5x8.yaml --cam cameracalib.yaml \
    --imu imu.yaml --bag cameraimucalib.bag --bag-from-to 0 72
```

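cameracalib.yaml is the stereo camera configuration file generated by the stereo camera calibration step above (Kalibr writes it as camchain-cameraimucalib.yaml by default, so rename it or pass that name instead). imu.yaml is the IMU configuration file in mynt-eye-okvis-sample/config. --bag-from-to 0 72 means that only the data from 0 s to 72 s of the bag is used for calibration.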
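The file in mynt-eye-okvis-sample/config is the authoritative imu.yaml. If you need to recreate one, the sketch below renders a Kalibr-style IMU configuration using the values echoed in the "IMU configuration" block of the Kalibr output under Results, assuming that block reflects the file that was used. The echoed update rate (500) differs from the 250 Hz sampling rate stated under Hardware, so set update_rate to the rate your device actually streams at. The kalibr_imu_yaml helper is hypothetical.

```python
def kalibr_imu_yaml(acc_nd, acc_rw, gyr_nd, gyr_rw, rate_hz, topic="/imu0"):
    """Render a Kalibr-style IMU configuration file as a string."""
    return (
        f"accelerometer_noise_density: {acc_nd}\n"  # continuous-time
        f"accelerometer_random_walk: {acc_rw}\n"
        f"gyroscope_noise_density: {gyr_nd}\n"      # continuous-time
        f"gyroscope_random_walk: {gyr_rw}\n"
        f"rostopic: {topic}\n"
        f"update_rate: {rate_hz}\n"                 # Hz, used for discretization
    )

text = kalibr_imu_yaml(0.0011809, 8.2583e-05, 7.2396e-05, 1.3e-05, 500.0)
print(text)  # save this output as imu.yaml
```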

Results

The YAML formats of the calibration files can be seen here.

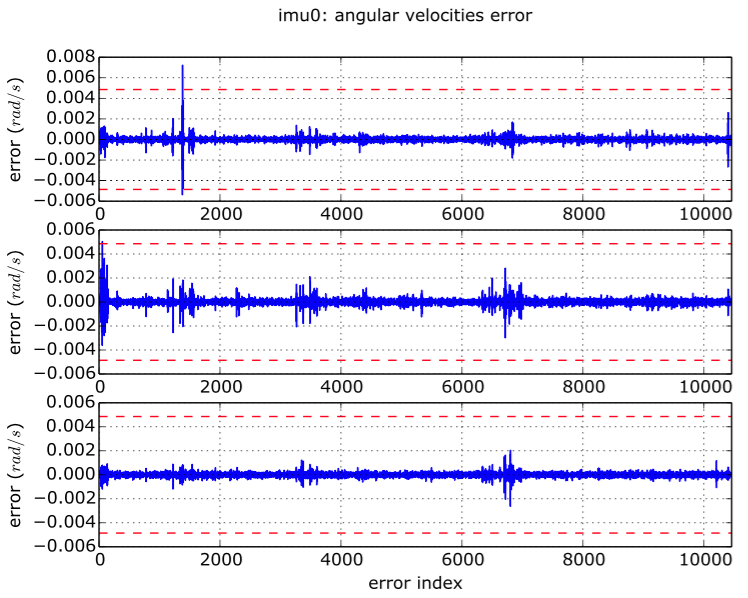

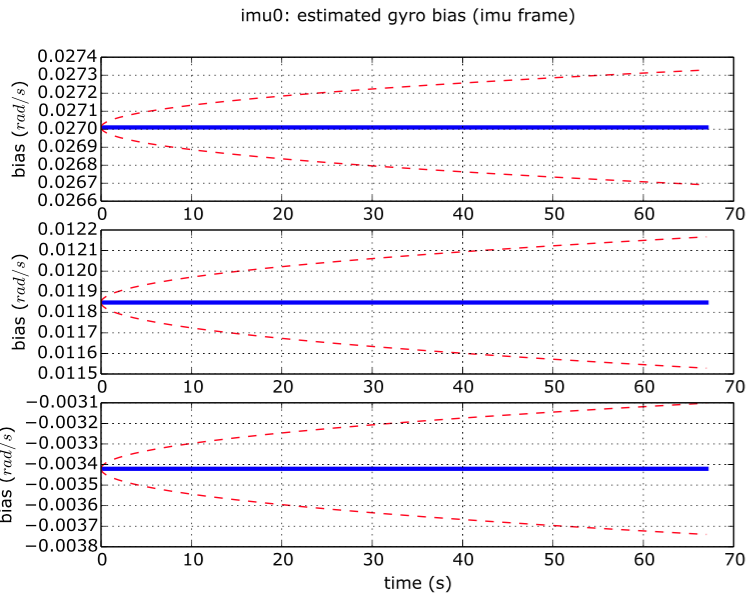

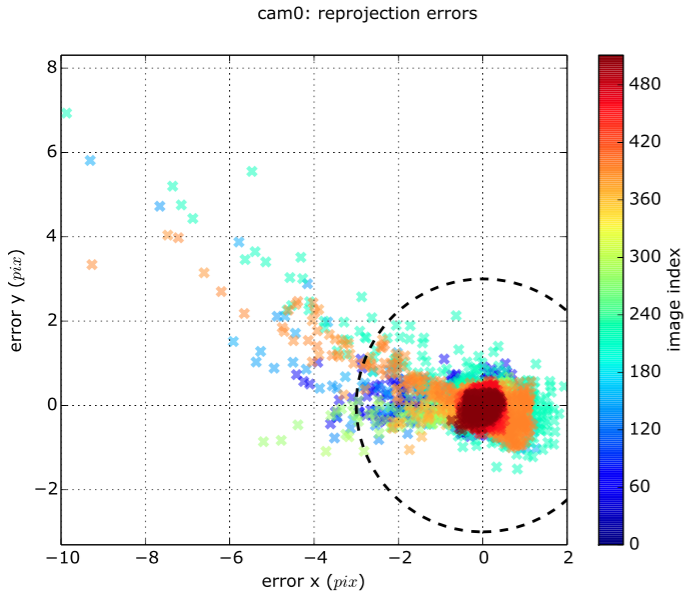

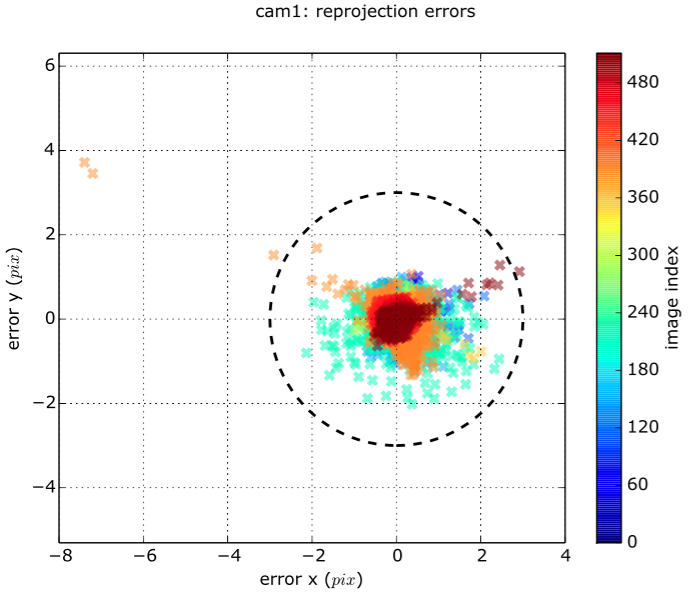

```text
Calibration results
===================
Normalized Residuals
----------------------------
Reprojection error (cam0):     mean 0.246874712924, median 0.217537207629, std: 0.25184628687
Reprojection error (cam1):     mean 0.195955751013, median 0.175831842512, std: 0.140312508567
Gyroscope error (imu0):        mean 0.174678800713, median 0.142828483069, std: 0.187200875016
Accelerometer error (imu0):    mean 0.132150523822, median 0.108954584935, std: 0.106560662224

Residuals
----------------------------
Reprojection error (cam0) [px]:     mean 0.246874712924, median 0.217537207629, std: 0.25184628687
Reprojection error (cam1) [px]:     mean 0.195955751013, median 0.175831842512, std: 0.140312508567
Gyroscope error (imu0) [rad/s]:     mean 0.000282774195232, median 0.000231214143852, std: 0.000303045226801
Accelerometer error (imu0) [m/s^2]: mean 0.00348953062143, median 0.00287702499756, std: 0.00281381172859

Transformation (cam0):
-----------------------
T_ci:  (imu0 to cam0):
[[ 0.04341751  0.99896022  0.01390713 -0.00252296]
 [-0.99902347  0.04329777  0.00879867  0.00163378]
 [ 0.00818737 -0.01427556  0.99986458 -0.0060169 ]
 [ 0.          0.          0.          1.        ]]

T_ic:  (cam0 to imu0):
[[ 0.04341751 -0.99902347  0.00818737  0.00179099]
 [ 0.99896022  0.04329777 -0.01427556  0.0023637 ]
 [ 0.01390713  0.00879867  0.99986458  0.0060368 ]
 [ 0.          0.          0.          1.        ]]

timeshift cam0 to imu0: [s] (t_imu = t_cam + shift)
0.0

Transformation (cam1):
-----------------------
T_ci:  (imu0 to cam1):
[[ 0.04587416  0.99870286  0.02209422 -0.12418407]
 [-0.99892623  0.04571856  0.00749699  0.00192461]
 [ 0.00647715 -0.02241441  0.99972778 -0.00529794]
 [ 0.          0.          0.          1.        ]]

T_ic:  (cam1 to imu0):
[[ 0.04587416 -0.99892623  0.00647715  0.00765369]
 [ 0.99870286  0.04571856 -0.02241441  0.12381624]
 [ 0.02209422  0.00749699  0.99972778  0.00802582]
 [ 0.          0.          0.          1.        ]]

timeshift cam1 to imu0: [s] (t_imu = t_cam + shift)
0.0

Baselines:
----------
Baseline (cam0 to cam1):
[[ 0.99996344 -0.00239335  0.00820977 -0.12160789]
 [ 0.00240439  0.99999622 -0.00133526  0.00028887]
 [-0.00820654  0.00135495  0.99996541  0.00069583]
 [ 0.          0.          0.          1.        ]]
baseline norm:  0.121610228073 [m]

Gravity vector in target coords: [m/s^2]
[ 7.91261884  0.21833681 -5.7888872 ]

Calibration configuration
=========================

cam0
-----
  Camera model: pinhole
  Focal length: [441.99817968009677, 441.66869887034943]
  Principal point: [369.9738561699648, 234.13190157824815]
  Distortion model: radtan
  Distortion coefficients: [-0.2974398881998292, 0.08194961900031578, 0.00022897719568841816, -0.00012227166858536044]
  Type: aprilgrid
  Tags:
    Rows: 8
    Cols: 5
    Size: 0.025 [m]
    Spacing 0.0075 [m]

cam1
-----
  Camera model: pinhole
  Focal length: [441.6397246072589, 441.52814086273514]
  Principal point: [344.95454482822214, 263.0207032045381]
  Distortion model: radtan
  Distortion coefficients: [-0.3086004799815589, 0.0952146365061291, -0.00035306500466706703, 0.000399686074862005]
  Type: aprilgrid
  Tags:
    Rows: 8
    Cols: 5
    Size: 0.025 [m]
    Spacing 0.0075 [m]

IMU configuration
=================

IMU0:
----------------------------
  Model: calibrated
  Update rate: 500
  Accelerometer:
    Noise density: 0.0011809
    Noise density (discrete): 0.0264057267463
    Random walk: 8.2583e-05
  Gyroscope:
    Noise density: 7.2396e-05
    Noise density (discrete): 0.00161882377299
    Random walk: 1.3e-05
  T_i_b
    [[ 1.  0.  0.  0.]
     [ 0.  1.  0.  0.]
     [ 0.  0.  1.  0.]
     [ 0.  0.  0.  1.]]
  time offset with respect to IMU0: 0.0 [s]
```
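Kalibr reports each extrinsic in both directions, so T_ic should be the inverse of T_ci. For a rigid transform [R t; 0 1] the inverse is [Rᵀ −Rᵀt; 0 1]. The baseline norm is the Euclidean length of the translation column of the cam0-to-cam1 baseline. The sketch below (standard library only, illustrative helper names) checks both against the printed values for cam0.

```python
import math

def invert_rigid(T):
    """Invert a 4x4 rigid transform [R t; 0 1] as [R^T  -R^T t; 0 1]."""
    R = [row[:3] for row in T[:3]]
    t = [row[3] for row in T[:3]]
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]            # transpose
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

# T_ci (imu0 to cam0) copied from the output above.
T_ci_cam0 = [
    [0.04341751, 0.99896022, 0.01390713, -0.00252296],
    [-0.99902347, 0.04329777, 0.00879867, 0.00163378],
    [0.00818737, -0.01427556, 0.99986458, -0.0060169],
    [0.0, 0.0, 0.0, 1.0],
]
T_ic_cam0 = invert_rigid(T_ci_cam0)
print([round(T_ic_cam0[i][3], 6) for i in range(3)])  # compare with T_ic (cam0 to imu0)

# Translation column of "Baseline (cam0 to cam1)".
baseline_t = [-0.12160789, 0.00028887, 0.00069583]
baseline_norm = math.sqrt(sum(x * x for x in baseline_t))
print(baseline_norm)  # compare with "baseline norm"
```

Small differences in the last printed digits come from Kalibr rounding the matrices to eight decimals.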
